AMD recently announced the new Secure Encrypted Virtualization (SEV) extension, which intends to protect virtual machines against compromised hypervisors/Virtual Machine Monitors (VMMs). An intended use case of SEV is to protect a VM against a malicious cloud provider: all memory contents are encrypted, so the cloud provider cannot recover any of the data inside. The root of trust lies with AMD, whose hardware controls all access to the keys.

SEV is a rather new feature, and so far I have only come across the announcement of the patch [2] and a presentation at the recent KVM Forum [1]. The goal of this blog post is to compare two new technologies, AMD SEV [1] and Intel SGX [4], and to relate them to the Overshadow [5] research paper.

What Overshadow showed is that it is surprisingly difficult to protect a lower-privileged component from a higher-privileged component without significantly redesigning the lower-privileged component. So far, the lower-privileged component (the VM) trusted the VMM. If the VMM is removed from the trusted computing base, any part of the VM that interacts with the VMM must be vetted and protected against nasty attacks such as corrupted data or time-of-check-to-time-of-use attacks. Any part that is not hardened likely allows an attacker to mount a ROP/JOP attack or even inject code, given knowledge of writable/executable regions. From there, the adversary can simply extract data through the existing I/O channels or spawn a remote shell. More on that in the security discussion.

Overshadow

Overshadow [5] removes the OS from the trusted computing base, protecting application data against a malicious (or compromised) OS. Overshadow does not rely on special hardware and is implemented at the VMM level. There are several other trust-separation mechanisms, but Overshadow was the first to remove trust from a large OS kernel while keeping most of the functionality of the OS.

The VMM presents two different views of memory to executing code, depending on which privilege level accesses it. The application process sees its pages unmodified, but whenever the OS accesses an application page, the VMM transparently encrypts it and keeps integrity metadata, protecting both the confidentiality and the integrity of the data. This allows the OS to still handle memory allocation and bookkeeping while keeping the contents of the pages hidden. These cloaking/uncloaking operations are implemented at the VMM level.
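To make the two memory views concrete, here is a toy Python model of a single cloaked page. It is a sketch under loud assumptions, not Overshadow's implementation: the class `ShadowedPage`, its `access(who)` interface, and the SHA-256-based keystream are hypothetical stand-ins (a real system would use a vetted cipher), and the model ignores how the VMM actually detects which context touches the page. The page is cloaked (encrypted, with a hash recorded in VMM memory) when the OS touches it, and uncloaked (hash checked, then decrypted) when the application touches it again.

```python
import hashlib
import hmac
import os


def keystream(key: bytes, iv: bytes, n: int) -> bytes:
    """Toy keystream (SHA-256 in counter mode); a stand-in, not a vetted cipher."""
    out = bytearray()
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(key + iv + counter.to_bytes(8, "little")).digest()
        counter += 1
    return bytes(out[:n])


class ShadowedPage:
    """Hypothetical model of one application page managed by the VMM."""

    def __init__(self, data: bytes, key: bytes) -> None:
        self._key = key            # held by the VMM, never visible to the guest OS
        self._data = bytearray(data)
        self._cloaked = False      # False: plaintext view, True: ciphertext view
        self._iv = b""
        self._digest = b""         # integrity metadata, kept in VMM memory

    def _xor_keystream(self) -> None:
        ks = keystream(self._key, self._iv, len(self._data))
        self._data = bytearray(a ^ b for a, b in zip(self._data, ks))

    def _cloak(self) -> None:
        # Encrypt in place with a fresh IV and remember a hash of the ciphertext.
        self._iv = os.urandom(16)
        self._xor_keystream()
        self._digest = hashlib.sha256(self._iv + bytes(self._data)).digest()
        self._cloaked = True

    def _uncloak(self) -> None:
        # Refuse to decrypt if the OS modified the ciphertext while it was cloaked.
        current = hashlib.sha256(self._iv + bytes(self._data)).digest()
        if not hmac.compare_digest(current, self._digest):
            raise ValueError("page was modified while cloaked")
        self._xor_keystream()
        self._cloaked = False

    def access(self, who: str) -> bytearray:
        """Return the view of the page that matches the accessing context."""
        if who == "app" and self._cloaked:
            self._uncloak()
        elif who == "os" and not self._cloaked:
            self._cloak()
        return self._data


if __name__ == "__main__":
    page = ShadowedPage(b"secret balance: 42", key=os.urandom(32))
    print(bytes(page.access("app")))   # plaintext for the application
    print(bytes(page.access("os")))    # ciphertext for the OS
    page.access("os")[0] ^= 0x01       # a malicious OS flips one bit
    try:
        page.access("app")
    except ValueError as err:
        print("tampering detected:", err)
```

Keeping the hash in VMM memory, out of the OS's reach, is what turns tampering by the OS into a detectable error rather than silent corruption.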

A caveat of Overshadow is that several core features of the operating system had to be reimplemented at the VMM level, increasing the porting and development effort as well as the attack surface. Cloaking the application's address space stops the OS from accessing any of the application's memory, so all system calls that need such access have to be trapped and emulated at the VMM level. In the end, the guest has to call into the VMM for functions like pipes, file access, signal handling, or thread creation. Overshadow showed that it is immensely challenging to remove trust from a higher-privileged component: to secure the lower level, one has to reimplement several features of the now untrusted component at an even higher, still trusted level (for Overshadow, the VMM), circumventing the untrusted component.

Intel Software Guard Extensions (SGX)

Intel SGX [4] allows so-called enclaves to execute orthogonally to, and protected from, the BIOS, VMM, and OS, removing these components from the trusted computing base. An enclave is a set of pages inside a user-space process. SGX guarantees that a software module and its data are protected from the untrusted environment and can compute on confidential data: the pages of the enclave are encrypted to the outside but accessible inside the enclave. An enclave is initialized by loading pages into a new enclave, followed by an attestation. SGX guarantees integrity and confidentiality, but the VMM or OS controls scheduling and memory and thereby controls availability.

SGX was carefully designed to reduce the interaction between the untrusted and the trusted components. The untrusted components control scheduling and, to some extent, memory (the OS/VMM controls how many pages are mapped to the enclave). The untrusted user-space part of the process controls I/O to the enclave through a per-enclave I/O channel, e.g., a shared page. The code in the enclave is explicitly designed for this attacker model: it assumes that data coming from the outside is untrusted and vets all incoming data.
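The copy-then-check discipline that enclave code has to follow can be sketched as follows. This is an illustrative Python model of the pattern, not the SGX SDK: `enclave_entry`, `EnclaveError`, the 2-byte length header, and `MAX_PAYLOAD` are all hypothetical. The key point is that the enclave snapshots the shared page once and validates only its private copy, so the untrusted host cannot change the data between the check and its use.

```python
import struct

MAX_PAYLOAD = 64  # hypothetical per-enclave limit


class EnclaveError(Exception):
    pass


def enclave_entry(shared_page: bytearray) -> bytes:
    """Hypothetical enclave entry point that reads one request from a shared page.

    Assumed request layout: a 2-byte little-endian length followed by the payload.
    """
    # 1. Copy the request into enclave-private memory exactly once. From here
    #    on, the untrusted host can rewrite shared_page without affecting us.
    request = bytes(shared_page)

    # 2. Validate the private copy, never the shared page.
    if len(request) < 2:
        raise EnclaveError("truncated header")
    (length,) = struct.unpack_from("<H", request, 0)
    if length > MAX_PAYLOAD or 2 + length > len(request):
        raise EnclaveError("invalid length")
    payload = request[2:2 + length]

    # 3. Only now compute on the validated data (placeholder computation).
    return payload.upper()


if __name__ == "__main__":
    page = bytearray(struct.pack("<H", 5) + b"hello")
    print(enclave_entry(page))
```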

The main attack vectors against SGX are side channels that reveal the access patterns of code and data in the enclave: on the software side, the OS constrains the pages available to the enclave and observes which pages it faults on; on the hardware side, the attacker observes cache-line fetches.
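The software side channel can be illustrated with a toy page-fault trace. In this hypothetical Python model, an enclave computes a modular exponentiation with a secret exponent using square-and-multiply, and the squaring and multiplication routines are assumed to live on different pages (`SQUARE_PAGE`, `MULTIPLY_PAGE`). A malicious OS that unmaps those pages sees a fault per access and learns the exponent bit by bit without ever reading enclave memory. A real attack has to re-arm the traps by remapping and unmapping pages after each fault; the sketch simply records every access.

```python
from typing import Callable, List

# Hypothetical page numbers of two routines inside the enclave. The attack only
# needs them to live on different pages.
SQUARE_PAGE = 0x10
MULTIPLY_PAGE = 0x20


def enclave_modexp(base: int, exponent_bits: List[int], modulus: int,
                   touch: Callable[[int], None]) -> int:
    """Left-to-right square-and-multiply with a secret exponent (MSB first).

    touch(page) stands in for executing code on that page; a malicious OS that
    unmaps the enclave's pages observes each call as a page fault.
    """
    acc = 1
    for bit in exponent_bits:
        touch(SQUARE_PAGE)
        acc = acc * acc % modulus
        if bit:  # secret-dependent control flow
            touch(MULTIPLY_PAGE)
            acc = acc * base % modulus
    return acc


class MaliciousOS:
    """Sees only the page numbers that fault, never the enclave's data."""

    def __init__(self) -> None:
        self.trace: List[int] = []

    def on_page_fault(self, page: int) -> None:
        self.trace.append(page)


def recover_exponent(trace: List[int]) -> List[int]:
    """Each SQUARE fault starts a new bit; a following MULTIPLY fault means 1."""
    bits: List[int] = []
    for page in trace:
        if page == SQUARE_PAGE:
            bits.append(0)
        elif page == MULTIPLY_PAGE:
            bits[-1] = 1
    return bits


if __name__ == "__main__":
    secret = [1, 0, 1, 1, 0, 0, 1]
    attacker = MaliciousOS()
    enclave_modexp(7, secret, 1_000_003, attacker.on_page_fault)
    print(recover_exponent(attacker.trace) == secret)  # prints True
```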

AMD Secure Encrypted Virtualization (SEV)

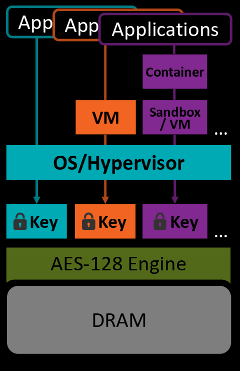

AMD SEV [1] protects the memory of each VM and of the VMM with an individual encryption key, managed by the hardware [3]. The VMM therefore does not have access to the decrypted contents of the guest VM. The VMM remains in control of the execution of the guest VM in terms of (i) memory management, (ii) scheduling, (iii) I/O control, and (iv) device management. Both the VMM and the guest VM must be aware of this new security feature and cooperatively enable it.

When the guest enables SEV, a form of attestation certifies, using a root of trust that AMD controls, that the VMM actually plays along and enables this feature (and does not just emulate it in software on top of, e.g., QEMU). Interestingly, SEV encrypts the data (protecting confidentiality) but does not protect its integrity. The missing integrity protection allows attacks at the cryptographic level, such as replaying stale ciphertext.
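Why missing integrity protection enables replay can be shown with a toy model. The sketch below is not the SEV cipher: `_pad` is a hypothetical stand-in whose output is assumed to depend only on the per-VM key and the physical address, and `Guest` is an invented wrapper around loads and stores. Because nothing binds a ciphertext to the time it was written, a VMM that saves an old ciphertext and later writes it back makes the guest decrypt the stale value without any error.

```python
import hashlib


def _pad(key: bytes, paddr: int, n: int) -> bytes:
    # Toy stand-in for the memory-encryption engine: the pad depends only on the
    # per-VM key and the physical address, so the same plaintext stored at the
    # same address always yields the same ciphertext. Not the real SEV cipher.
    assert n <= 32, "toy pad covers at most 32 bytes"
    return hashlib.sha256(key + paddr.to_bytes(8, "little")).digest()[:n]


class Guest:
    """A guest whose loads/stores are transparently encrypted by the 'CPU'."""

    def __init__(self, dram: dict, key: bytes) -> None:
        self.dram = dram   # physical address -> ciphertext; the VMM can read/write it
        self._key = key    # per-VM key; in SEV it is managed by the hardware

    def store(self, paddr: int, value: bytes) -> None:
        pad = _pad(self._key, paddr, len(value))
        self.dram[paddr] = bytes(a ^ b for a, b in zip(value, pad))

    def load(self, paddr: int) -> bytes:
        # No MAC and no freshness check: whatever ciphertext is in DRAM is decrypted.
        ct = self.dram[paddr]
        pad = _pad(self._key, paddr, len(ct))
        return bytes(a ^ b for a, b in zip(ct, pad))


if __name__ == "__main__":
    dram: dict = {}
    guest = Guest(dram, key=b"per-VM key held by the CPU")
    guest.store(0x1000, b"balance=0100")
    snapshot = dram[0x1000]            # malicious VMM copies the ciphertext
    guest.store(0x1000, b"balance=0000")
    dram[0x1000] = snapshot            # ...and later writes the stale copy back
    print(guest.load(0x1000))          # the guest silently reads the old value
```

Detecting this would require binding each ciphertext to a version the VMM cannot roll back, which is exactly the integrity protection that is missing here.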

In such a mutually distrusting scheme, not all memory can be encrypted: data must be passed between the isolated components through some form of I/O channel. In addition, there must be a way to transfer control into the other component. The VM obviously needs to request I/O from the VMM and interact with the emulated or para-virtualized hardware that the VMM exports. Several entry points of the VM must also be exposed, e.g., to deliver exceptions and interrupts.

Security discussion

SEV is similar to Overshadow and SGX in that it removes trust from a higher-privileged component. Overshadow still trusts the memory bus, the BIOS, and the VMM, while SEV and SGX only trust the AMD or Intel CPU. The two main differences between SEV and SGX are the amount of code that sits in a protected module and the interface between the two privilege domains. SGX places the trusted enclave outside of all execution domains, orthogonal to existing privilege levels. Any interaction of the trusted enclave with the untrusted system has to go through a narrow interface that can be carefully vetted. SEV removes trust from the VMM, with all the potential downsides that Overshadow experienced as well. All existing code in the OS and the applications is now part of the broader attack surface (side-channel-wise), and all interaction between the OS and the VMM may be attacked or observed by an adversary.

For SGX, there is a clear cut between privilege domains, which forces developers to design a small API for communicating between the privileged and unprivileged compartments and keeps the amount of code in the trusted compartment "as small as possible". SEV, in comparison, seems bloated.

First, operating systems inherently trust all higher privilege levels: due to the hierarchical design, everything in the OS depends on those higher levels. Breaking this assumption may lead to unexpected security issues.

An SEV domain does not trust the VMM, but the VMM may control the data that is passed into the VM as well as the scheduling of the VM. This allows targeted time-of-check-to-time-of-use attacks, where the VMM modifies data after the VM has checked it but before the VM uses it. This is a very strong attack vector because code has to be explicitly designed with such malicious changes in mind. The code of individual device drivers, I/O handlers, and interrupt handlers is not designed with an active, higher-privileged adversary in mind. In addition, the code base is large, complex, and has grown over many years. An adversary who leverages this attack vector can likely mount a ROP/JOP attack or even inject code to take control of the VM, and then simply extract the data through the existing I/O channels or spawn a remote shell. AMD promises to develop virtio drivers for, e.g., KVM to handle at least the data transfer for (some?) I/O devices. What happens to all other interactions remains unclear. Second, the large code base of the VM makes information leaks and side channels more likely.
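The double-fetch flavor of such a time-of-check-to-time-of-use attack can be sketched in a few lines of Python. All names here are hypothetical, and a bytearray stands in for guest memory: a 16-byte receive buffer followed by some adjacent state such as a saved pointer. The `on_read` hook simulates the VMM rewriting a shared descriptor right after the guest reads it. The vulnerable driver checks the length with one fetch and uses it with a second fetch; the fixed version fetches once into private state.

```python
BUF_SIZE = 16  # size of the guest's receive buffer (hypothetical)


class SharedDescriptor:
    """An I/O descriptor in memory shared with, and writable by, the VMM.

    on_read is a hypothetical hook that lets this sketch simulate the VMM
    rewriting the field right after the guest has read it.
    """

    def __init__(self, length: int, on_read=None) -> None:
        self._length = length
        self._on_read = on_read

    @property
    def length(self) -> int:
        value = self._length          # every access is a fresh fetch from shared memory
        if self._on_read is not None:
            self._on_read(self)
        return value


def make_guest_memory() -> bytearray:
    # 16-byte receive buffer followed by 8 bytes of adjacent state, e.g. a saved pointer.
    return bytearray(BUF_SIZE) + bytearray(b"PTR_SAFE")


def copy_into_buffer(mem: bytearray, src: bytes, n: int) -> None:
    # Models an unchecked memcpy(buf, src, n): writes past the buffer if n > BUF_SIZE.
    mem[0:n] = src[:n]


def vulnerable_receive(mem: bytearray, desc: SharedDescriptor, src: bytes) -> None:
    if desc.length > BUF_SIZE:                 # time of check: first fetch
        raise ValueError("length too large")
    copy_into_buffer(mem, src, desc.length)    # time of use: second fetch


def safe_receive(mem: bytearray, desc: SharedDescriptor, src: bytes) -> None:
    n = desc.length                            # fetch once into guest-private state
    if n > BUF_SIZE:
        raise ValueError("length too large")
    copy_into_buffer(mem, src, n)


def vmm_race(desc: SharedDescriptor) -> None:
    # The malicious VMM enlarges the length after the guest has read it.
    desc._length = BUF_SIZE + 8


if __name__ == "__main__":
    payload = b"A" * BUF_SIZE + b"EVIL_PTR"
    mem = make_guest_memory()
    vulnerable_receive(mem, SharedDescriptor(8, on_read=vmm_race), payload)
    print(bytes(mem[BUF_SIZE:]))   # prints b'EVIL_PTR'
    mem = make_guest_memory()
    safe_receive(mem, SharedDescriptor(8, on_read=vmm_race), payload)
    print(bytes(mem[BUF_SIZE:]))   # prints b'PTR_SAFE'
```

In a real kernel, the overwritten bytes could be a return address or function pointer, which is exactly the foothold a ROP/JOP attack needs.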

I predict that we, the security community, will find plenty of vulnerabilities in this layer now that the trust is revoked. As SEV will be used in secure clouds, its data and code will likely become a high-profile target. As a hacker, I see large amounts of code that are suddenly accessible to an adversary: the definition of what an adversary can do has changed, and much more code and data are now exposed.

Intel stepped around this problem by placing the enclave orthogonally to all privilege domains, thereby not breaking the trust assumptions of existing code. If you want to use SGX, you have to design the interface between trusted and untrusted code while being aware of the attacker model. For SEV, the attacker model just changed under all existing code.

Note that so far only limited information is available, and I'm basing my analysis on the publicly available documents. I could be completely wrong about this, so please comment and let me know your thoughts. Overall, this is an interesting technology, but I'm wary of the newly exposed attack surface.

Changelog

- 09/24/16 Jethro Beekman (@JethroGB) mentioned integrity attacks against AMD SEV.

- 09/24/16 twiz (@lazytyped) asked to clarify the ROP attack vector.

[1] Thomas Lendacky. AMD's Virtualization Memory Encryption. KVM Forum'16.

[2] Brijesh Singh. x86: Secure Encrypted Virtualization (AMD). LKML'16.

[3] David Kaplan, Jeremy Powell, and Tom Woller. AMD Memory Encryption. Technical Report. 2016.

[4] Intel Software Guard Extensions.